Artists Fight Back Against AI With New Data Poisoning Tool

By Tim Busbey

Tim Busbey is a business and technology journalist from Ohio who brings diverse writing experience to the Cronicle team. He works on the Cronicle tech and business blog and with Cronicle content marketing clients.

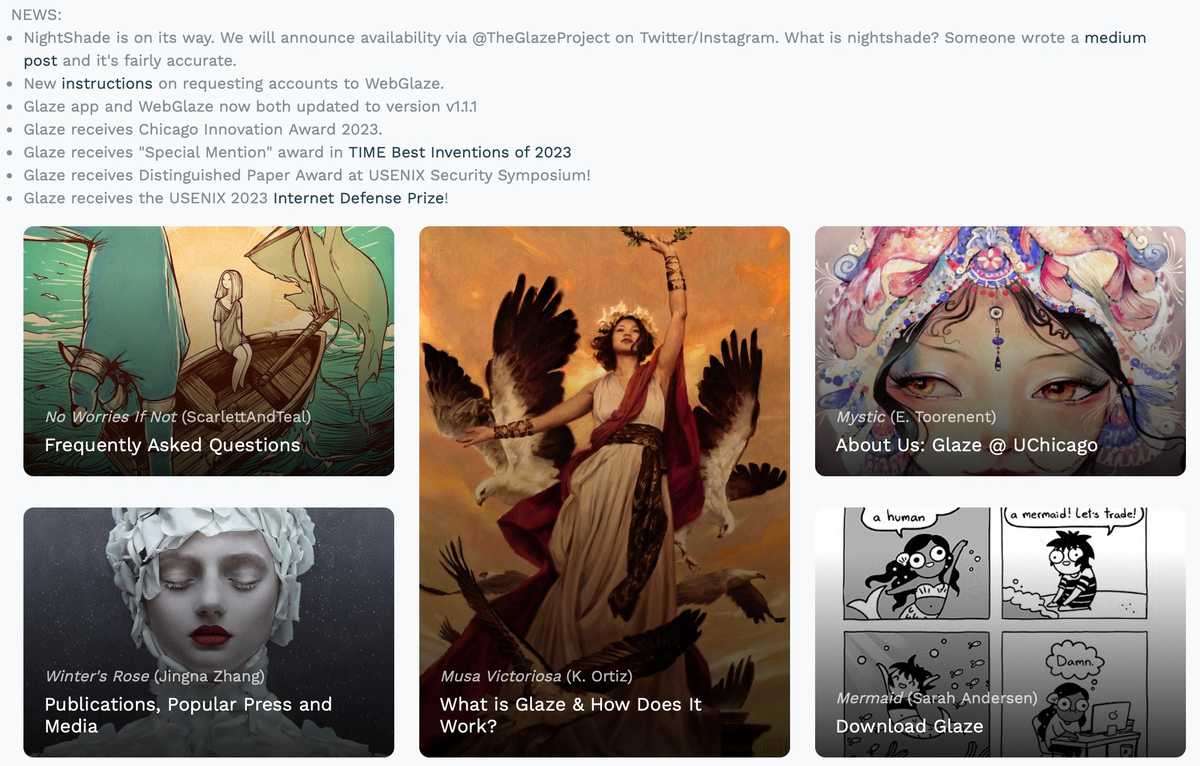

A new data poisoning tool known as Nightshade gives artists a way to fight back against artificial intelligence systems that use their creations without permission. The tool has serious potential to disrupt the data grab by AI startups that scrape content without permission or compensation to train their highly profitable generative AI tools. Nightshade will also be integrated with Glaze, a tool that similarly lets artists protect their style from being scraped by AI engines.

The rapid advancement of generative AI has been met with both enthusiasm and skepticism. While some view these systems as a new frontier in artistic creation, others see them as a threat to human creativity, promoting a world in which AI creates art and humans do mundane work, rather than the reverse, which most people would prefer. Artists argue that algorithms, although efficient, lack the emotional depth and complexity that human-made art often embodies. It is within this landscape of mixed opinions that data poisoning tools like Nightshade are making their mark.

Nightshade is a data poisoning tool specifically designed to target generative AI systems that create art, such as DALL-E, Midjourney, and Stable Diffusion. Developed by researchers at the University of Chicago, Nightshade operates by introducing what seems to be legitimate data into the AI's training dataset. However, this data has been subtly altered or "poisoned" to impair the AI's generative abilities. Once the AI incorporates this poisoned data, the result is often distorted, subpar artwork that fails to meet the aesthetic standards usually expected.
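To make the general idea of data poisoning concrete, here is a deliberately tiny sketch in Python. It is not Nightshade's actual technique and does not touch real images. Everything in it is an assumption made for illustration: the "model" is just an average feature vector per label, the feature vectors are made-up numbers, and the function names `train_centroids` and `poison` are hypothetical. It only shows how samples that look legitimate but pair the wrong content with a label can pull a trained model's notion of that label off course.

```python
from statistics import mean


def train_centroids(samples):
    """Toy 'training': learn one centroid (mean feature vector) per label."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(dim) for dim in zip(*vectors))
        for label, vectors in by_label.items()
    }


def poison(samples, source_label, target_label, count):
    """Build `count` poisoned samples: target-like features filed under source_label."""
    target_features = [f for f, label in samples if label == target_label]
    return [
        (target_features[i % len(target_features)], source_label)
        for i in range(count)
    ]


# Hypothetical, hand-made feature vectors standing in for scraped images.
clean = [
    ((0.0, 0.0), "dog"), ((1.0, 0.0), "dog"),
    ((0.0, 1.0), "dog"), ((1.0, 1.0), "dog"),
    ((10.0, 10.0), "cat"), ((11.0, 10.0), "cat"),
    ((10.0, 11.0), "cat"), ((11.0, 11.0), "cat"),
]

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison(clean, "dog", "cat", 4))

print("clean 'dog' centroid:   ", clean_model["dog"])
print("poisoned 'dog' centroid:", poisoned_model["dog"])
```

In this toy setup, the "dog" centroid drifts from its original position toward the "cat" cluster once the poisoned samples are mixed in, while the "cat" centroid is unchanged. Real generative models are vastly more complex, so this should be read only as an intuition aid.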

The tool has gained notoriety as a form of creative resistance, embodying the frustrations of artists who feel overshadowed by machine-generated art. By utilizing Nightshade, these artists aim to level the playing field, disrupting the generative algorithms that they think are diluting the human element in art. It acts as digital disobedience, questioning who or what can be considered an "artist" in this evolving technological landscape.

"We assert that Nightshade can provide a powerful tool for content owners to protect their intellectual property against model trainers that disregard or ignore copyright notices, do-not-scrape/crawl directives, and opt-out lists," the researchers from the University of Chicago wrote in their report. "Movie studios, book publishers, game producers and individual artists can use systems like Nightshade to provide a strong disincentive against unauthorized data training."

Nightshade will be open to all developers and will also be integrated into the Glaze tool.

While Nightshade is celebrated by some for its audacity and its stand against the mechanization of art, it also raises critical ethical questions. Is it right to intentionally sabotage a system, especially when generative AI has the potential for constructive applications as well? This dual nature of Nightshade places it at the heart of a growing debate between technological progress and ethical considerations.

Ethical Implications of Data Poisoning Tools

The use of data poisoning tools like Nightshade adds another layer of complexity to the ethical landscape of AI. Intentionally sabotaging an AI system can be viewed through multiple ethical lenses, each with its own considerations and consequences.

Human vs. Machine Creativity

One of the core ethical debates centers around the preservation of human creativity in the face of rapid technological advancements. Supporters of data poisoning tools argue that these acts serve as a form of resistance against the commercialization and mechanization of art. They worry that the human element—emotion, depth, and nuance—is getting lost in the rush to embrace AI-driven creative processes. In their view, data poisoning serves as a safeguard for the intrinsic value of human artistry.

Potential for Harm

On the flip side, intentionally manipulating data to disrupt AI systems could have broader, unintended consequences. These acts of sabotage may not only impact generative art but could also be adapted to target other forms of AI that serve crucial roles in society. Think of medical diagnostic systems, self-driving cars, or fraud detection mechanisms. If the principles of data poisoning are applied to these domains, the ethical stakes become far higher.

A Complex Relationship

The relationship between artists and AI technologies is far from simple. Artists, with their intrinsic need for creative expression, often view AI as both a tool and a threat. On one hand, AI offers new avenues for artistic creation, such as data-driven installations and interactive experiences. On the other hand, it presents a challenge to the traditional concepts of authorship, originality, and value in the art world.

The Allure and Danger of AI in Art

Artists are intrigued by the possibilities that AI provides, whether it's the use of algorithms to interpret large datasets for creating art or the automation of certain design processes. This allure is counterbalanced by the potential risks that AI poses, including the dilution of individual creative expression. Nightshade and similar data poisoning tools embody this tension. They are a reaction against the feeling that technology is beginning to overshadow human capability and intent, with virtually no accountability for its impact on human lives, the climate, or other aspects of the world we live in.

Co-creation and Conflict

Another factor to consider is the potential for human-AI co-creation. There are artists who collaborate with AI systems to produce work that neither could create independently. These collaborations yield art that is often groundbreaking and expands the boundaries of what is considered possible. However, the introduction of data poisoning tools in this context raises ethical questions about the integrity of such partnerships. Is it right to manipulate a co-creator, even if it is a machine?

Ownership and Attribution

In the realm of AI-generated art, the lines around ownership and attribution are increasingly blurred. If an artist uses a data poisoning tool like Nightshade to alter an AI-generated piece of art, who owns the final product? And who should be credited for the work? These questions add further complexity to the already intricate relationship between artists and AI.

Emotional and Ethical Investment

Artists invest emotional labor and ethical considerations into their work, while AI, being devoid of feelings, operates on algorithms and data. This inherent difference adds to the complexity, as artists must reconcile their emotional and ethical investment in the craft with the more mechanical, data-driven nature of AI-generated art.

The rise of data poisoning tools as a form of resistance against generative AI is a symptom of broader ethical and legal questions that society must address. These debates are likely to intensify as AI technologies become increasingly integrated into our daily lives. It's a multidimensional issue, requiring insights from technologists, legal experts, ethicists, and artists themselves to find a balanced path forward.

Whether considered as ethical resistance or digital tampering, data poisoning tools underscore the urgent need for responsible AI development and governance. As we navigate this complex landscape, it will be crucial to consider all viewpoints in developing a fair and ethical approach to AI and art.

For more on Nightshade and its implications, check out these articles:

https://www.axios.com/2023/10/27/ai-poison-nightshade-license-dall-e-midjourney

https://news.artnet.com/art-world/nightshade-ai-data-poisoning-tool-2385715

https://www.theverge.com/2023/10/25/23931592/generative-ai-art-poison-midjourney

Want to try Glaze? You can request an invite here: https://glaze.cs.uchicago.edu/webinvite.html